Metrics – Process

1. Introduction

2. Goal & Objectives

The primary goal of this process is to

The process covers the following objectives:

3. Role Model

The following roles are relevant in the context of this process and need to be assigned to persons or teams / groups involved in the process.

| Role | Task | Assigned staff |

| Process Manager (PM) |

|

Vincent |

| Metrics Officer |

|

Vincent |

4. Relevant Business Objects

Business objects are “things” that are required, produced, or processed by the process or one of its activities. The following objects are relevant:

| Business Object | Description |

| Print PDF |

The final PDF generated by the Typesetting Software |

5. Activities

Date Table

The Date Table in phpMyAdmin needs to be updated every month with the last month.

- Go to DateTable

- SQL Tab

To add:

INSERT INTO `DateTable`(`Date`) VALUES ("2021-01-01")

To delete:

DELETE FROM `DateTable` WHERE `Date` = "2021-01-01"

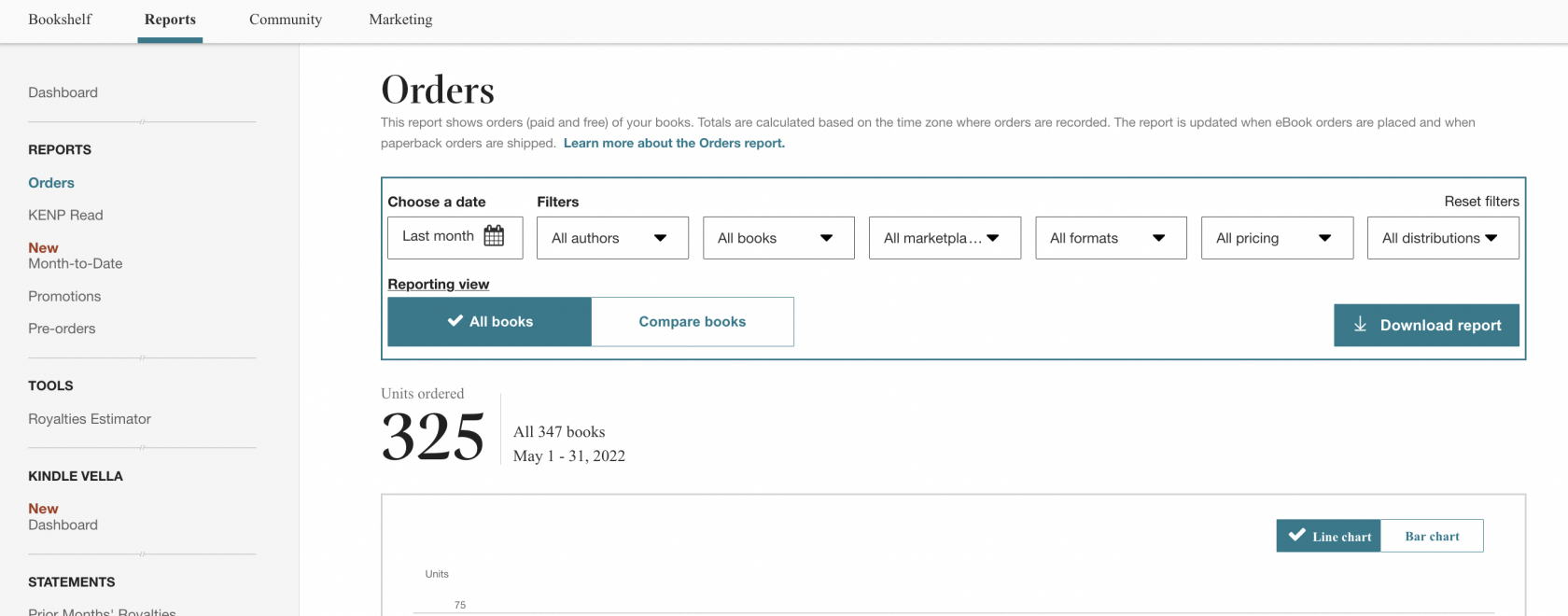

KDP

Each month, download the sales data from KDP through its backend. After 90 days, data are no longer downloadable and data entry should be done manually, which is a pain.

- Click "Reports" in the main menu on top

- Select "Orders" from the leftside menu

- Select the appropriate month

- Click "Download Report"

- Open in LibreOffice

- Tab "Combined Sales"

- Delete table header

- Save as text CSV, comma-delimited

- Upload into phpMyAdmin table "KDP"

JSTOR

JSTOR sends out a monthly email reminders to vincent@punctumbooks.com with a link to the download report, but the links in it are currently broken. So Log In in to JSTOR, and selected "Publisher Reports" from the upper right admin drop down menu.

You need both the Book Usage by Country and by Institution reports.

JSTOR delivers XLSX files.

Country

- Open in LibreOffice

- Change Reporting Period Total into YYYY-MM-01 format

- Delete table header rows.

- Save as text CSV, comma-delimited

- Upload into phpMyAdmin table "JSTOR_Country"

Institution

- Open in LibreOffice

- Change Reporting Period Total into YYYY-MM-01 format

- Delete table header rows.

- Save as text CSV, comma-delimited

- Upload into phpMyAdmin table "JSTOR_Institution"

Project Muse

Somewhere halfway each month, Muse generates the usage data for the previous month. They can be downloaded in the backend.

- Click "Reports an Statistics – Usage Statistics" in the top menu.

- Click "Run Statistics," then "Data Export."

- Enter the pertinent year, month, select "country" "institution," and "CSV," then click "Submit."

Project MUSE delivers CSV files, but they don't contain proper date column. Also, commas inside editor columns create errors in displaying the CSV.

- Open in LibreOffice (make sure to set encoding to UTF-8)

- Append 1 column to the left, add date in YYYY-MM-01 format

- Delete column P "punctum books"

- Delete table header

- Check all rows have proper column count (because of comma issue)

- Upload into phpMyAdmin table "PROJECT_MUSE2"

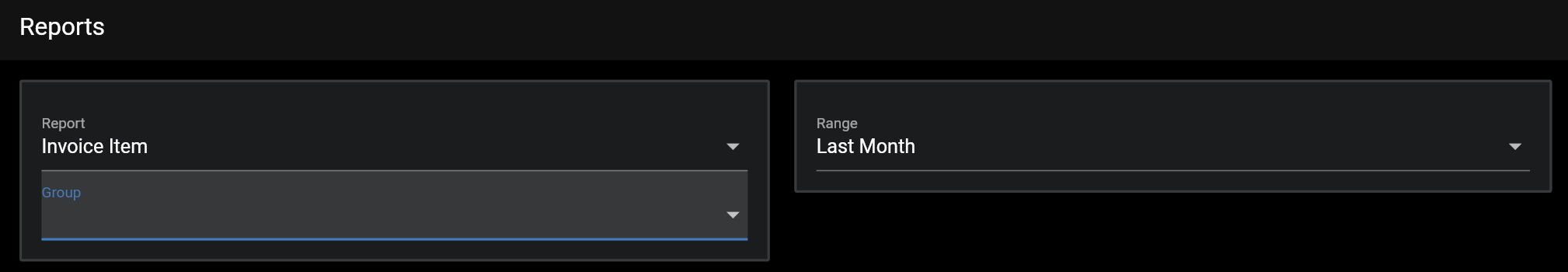

InvoiceNinja

Sales data per product can be conveniently generated under "Reports," organized by Invoice Item. Product numbers in InvoiceNinja correspond to PBNs.

InvoiceNinja delivers CSV files. They suck.

- Open in LibreOffice

- Sort by invoice number

- delete rows with non-book data

- convert Product column to text, append 0 to PBNs

- fix InvoiceDate to 20XX-XX-01

- fix price by removing $ sign

- delete table header

- save as text CSV, comma-delimited

- Upload into phpMyAdmin table "InvoiceNinja"

Lightning Source

The books from the Uitgeverij imprint are hosted through Lightning Source. Reports can be downloaded from the Reports>Print Sales menu item.

Lightning Source delivers XLS files.

- Open in LibreOffice, switch off "space" as a possible delimiter

- Append 1 column to the left, add date in YYYY-MM-01 format

- Delete table header

- Upload into phpMyAdmin table "Lightning_source"

Lightning Source reports have bee discontinued. Their new reports can no longer be exported.

OAPEN

OAPEN-Dashboard provides a download for all countries in CSV format.

- When importing switch off space/semicolon as delimiter

- Delete all columns from H up to present month

- Delete the Funders column

- Reformat column G to YYYY-MM-01 format

- Delete header

- Upload into phpMyAdmin table "OAPEN2"

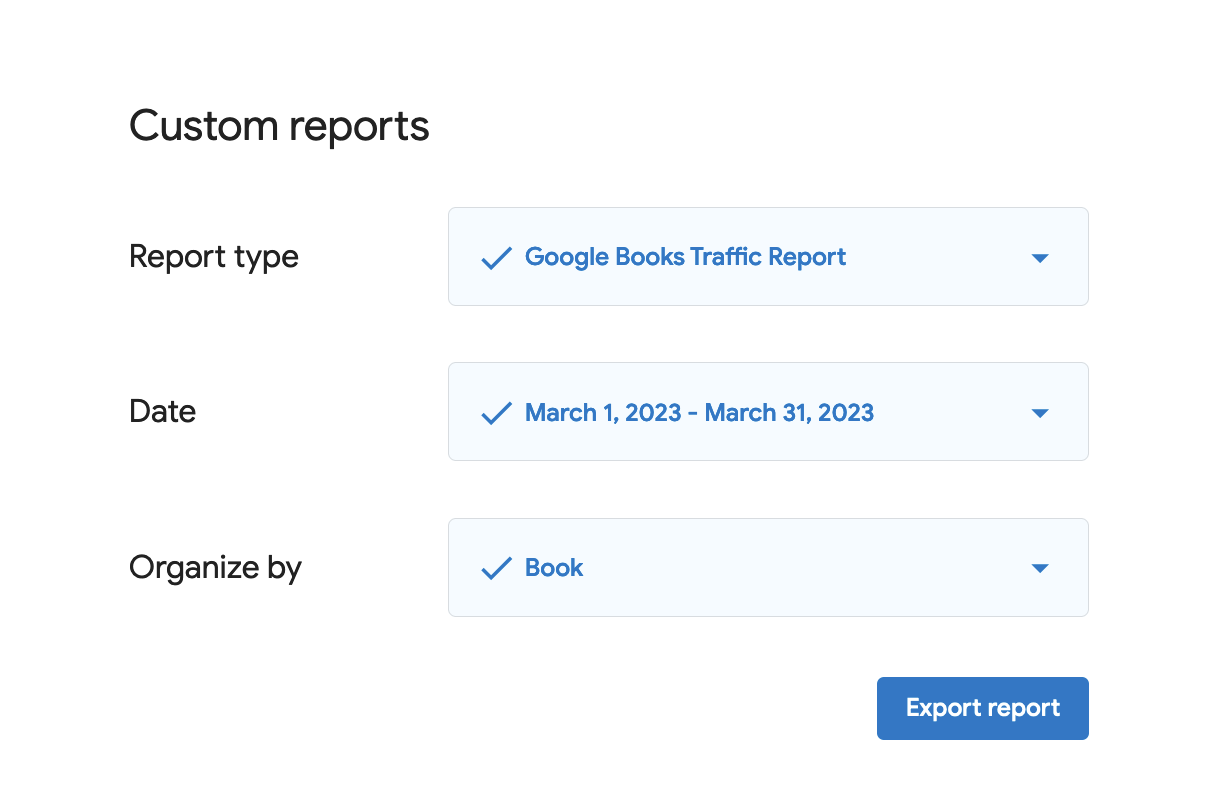

Google Books

Google Books usage data are available via the Google Play Books Partner Center.

- Delete header

- Append 1 column to the left, add date in YYYY-MM-01 format

- save as XLS

- re-export as CSV

- Upload into phpMyAdmin table "GoogleBooks"

Internet Archive

Usage data are directly extracted using the Internet Archive usage data API. We have written a script that extracts these data automatically and generates a CSV file, organized by Thoth workId and country per timeframe (usually a month).

In order to generate the data, make sure all workIds of recently published books are added, and select the correct timeframe.

- Import as encoded as UTF-8

- Delete header

- Upload into phpMyAdmin table "InternetArchive"

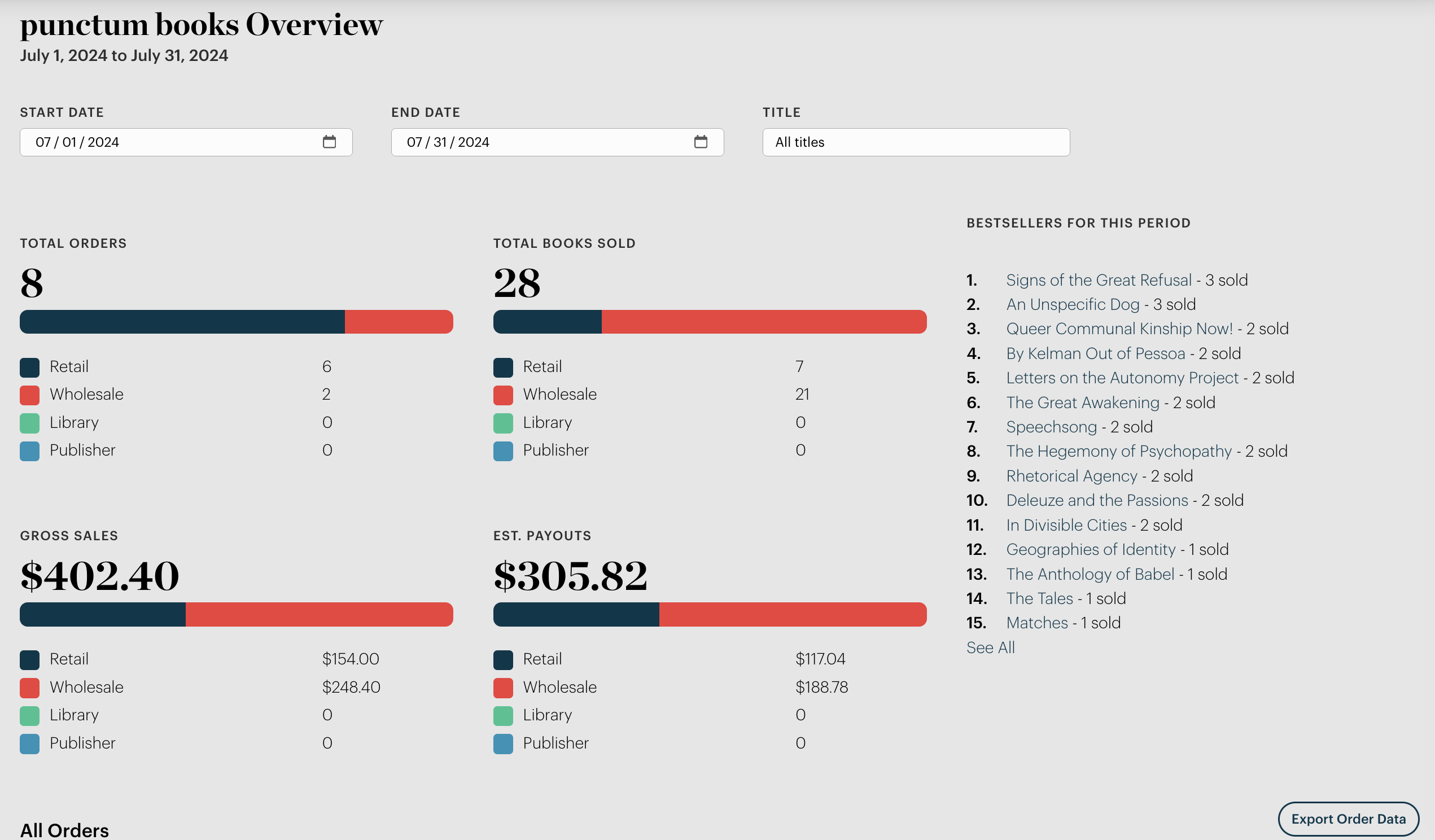

Asterism

Asterism provides monthly order data via their backend as CSV.

- Append 1 column to the left, add date in YYYY-MM-01 format

- Delete all rows up to the list of ISBNs

- Upload to phpMyAdmin table "Asterism"

Thoth CDN

The CDN operated by Thoth provides access to our PDF files (books.punctumbooks.com). The CDN also generates usage metrics. They are provided on a monthly basis by Javi from Thoth Open Metadata until an automated system is developed.

- delete the column event_uri

- in work_uri, remove the DOI prefixes and

.1.00at the end such that only the PBN remains (make sure the format is set to "Text" to preserve the initial zero) - double check that all PBNs are correct (with initial zero)

- delete the rows with the other publishers

- Upload to the phpMyAdmin table "Thoth_CDN"

CrossRef Event Data

CrossRef event data are plucked directly from their Query API. There are two DOI prefixes punctum uses, so we need records for both. Just fill in the correct start and end dates:

https://api.eventdata.crossref.org/v1/events?mailto=vincent@punctumbooks.com&obj-id.prefix=10.53288&from-occurred-date=2023-07-01&until-occurred-date=2023-07-31

https://api.eventdata.crossref.org/v1/events?mailto=vincent@punctumbooks.com&obj-id.prefix=10.21983&from-occurred-date=2023-07-01&until-occurred-date=2023-07-31

You can use Postman as a useful API GUI.

- You get a JSON file that needs to be converted to CSV.

- Clean up the occurred_at column by replacing "T" and "Z" with a space, and set data format to timestamp.

- Make sure all columns line up, and all columns have at least one cell filled (even with a dummy)

- Delete header row

- Upload into phpMyAdmin table "Crossref_Event"

EBSCO Ebooks

The data from EBSCO are prepared once a year and need to be requested by email. Be explicit you want them separated out per month. Email Amy Powers: apowers@EBSCO.COM.

The data comes in basically useless form and needs to be fully reformatted in order to be ingested into our MySQL database. See the example from the 2023–2024 data in the folder.

==

Data import happens through phpMyadmin. The tables are under "datadb."

In file to be imported:

- open file to be imported;

- make sure columns align properly;

- delete table headers;

In phpMyadmin:

- click on the pertinent table;

- select Import tab;

- select file

- click "Go."

Once all data are imported, in Metabase:

- Settings > Admin > Databases (in top menu) > punctum books data

- Click Sync database schema now

- Settings > Exit admin

Document Control

| Document ID | SPM-MET-001 |

| Document Owner | Vincent |

| Version | 1.0 |

| Last Date of Change | October 3, 2025 |

| Next Review Due Date | |

| Version & Change Tracking |